Technical Description

The following sections discuss some of the technical details of a Scyld cluster, such as the compute node boot procedure, the BProc distributed process space and BeoMaster process migration software, compute node categories and states, and miscellaneous components.

Compute Node Boot Procedure

The Scyld cluster architecture is designed around light-weight provisioning of compute nodes using the master node's kernel and Linux distribution. Network booting ensures that what is provisioned to each compute node is properly version-synchronized across the cluster.

Earlier Scyld distributions supported a 2-phase boot sequence. Following PXE boot of a node, a fixed Phase 1 kernel and initial RAM disk (initrd) were copied to the node and installed. Alternatively, this Phase 1 kernel and initrd were used to boot from local hard disk or removable media. The Phase 1 boot package then built the node root file system (rootfs) in a RAM disk, requested the run-time (Phase 2) kernel, used 2-Kernel-Monte to switch to it, and then loaded the Scyld daemons and initialized the BProc system. Means were provided for installing the Phase 1 boot package on local hard disk and on removable floppy and CD media.

Beginning with Scyld 30-series, PXE is the supported method for booting nodes into the cluster. For some years, all servers produced have supported PXE booting. For servers that cannot support PXE booting, Scyld ClusterWare provides the means to easily produce Etherboot media on floppy disk or CD to use as compute node boot media.

Scyld Beowulf Series 30 and Scyld ClusterWare HPC do not support booting compute nodes from local hard disk out of the box, although this can be configured. If you require it, contact Scyld Customer Support.

The Boot Package

The compute node boot package consists of the kernel, initrd, and rootfs for each compute node. The beoboot command builds this boot package.

By default, the kernel is the one currently running on the master node. However, other kernels may be specified to the beoboot command and recorded on a node-by-node basis in the Beowulf configuration file. This file also includes the kernel command line parameters associated with the boot package. This allows each compute node to potentially have a unique kernel, initrd, rootfs, and kernel command lines.

Note: If you specify a different kernel to boot specific compute nodes, these nodes cannot be part of the BProc unified process space.

The paths to the initrd and rootfs are passed to the compute node on the kernel command line, where they are accessible to the booting software.

Each time the Beowulf services restart on the master node, the beoboot command is run to recreate the default compute node boot package. This ensures that the package contains the same versions of the components as are running on the master node.

Booting a Node

A compute node begins the boot process by sending a PXE request over the cluster private network. This request is handled by Beoserv on the master node. Beoserv provides the compute node with an IP address and (based on the contents of the Beowulf configuration file) a kernel and initrd. If the cluster config file does not specify a kernel and initrd for a particular node, then the defaults are used.

The cluster config file specifies the path to the kernel, the initrd, and the rootfs. The initrd contains the minimal set of programs for the compute node to establish a connection to the master and request additional files. The rootfs is an archive of the root file system, including the file system directory structure and certain necessary files and programs, such as the BProc module and BPslave daemon.
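The fallback rule described above (use the node-specific entry if one exists, otherwise the defaults) can be sketched as a simple lookup. This is only an illustration: the `BootFiles` type, the `boot_files_for` function, and every path shown are hypothetical placeholders, not the format of the Beowulf configuration file or real Scyld paths.

```python
from typing import Dict, NamedTuple


class BootFiles(NamedTuple):
    kernel: str
    initrd: str
    rootfs: str


# Hypothetical default boot package; placeholder paths, not real Scyld locations.
DEFAULT_BOOT = BootFiles(
    "/example/boot/vmlinuz",
    "/example/boot/initrd.img",
    "/example/boot/rootfs.tar.gz",
)


def boot_files_for(node: int, overrides: Dict[int, BootFiles]) -> BootFiles:
    """Return the node-specific boot files if configured, otherwise the defaults."""
    return overrides.get(node, DEFAULT_BOOT)


# Node 5 has its own (hypothetical) boot package; every other node uses the defaults.
overrides = {
    5: BootFiles(
        "/example/boot/vmlinuz-test",
        "/example/boot/initrd-test.img",
        "/example/boot/rootfs-test.tar.gz",
    )
}
print(boot_files_for(5, overrides).kernel)
print(boot_files_for(0, overrides).kernel)
```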

Beoserv logs the entire dialog with the compute node, including its MAC address, all of the node's requests, and the responses. This facilitates debugging of compute node booting problems.

The initrd

Once the initrd is loaded, control is transferred to the kernel. Within the Scyld architecture, booting is tightly controlled by a program called nodeboot, which also serves as the compute node's init process. There is no shell and no initialization directories or scripts on the compute nodes. The entire boot process is directed and controlled through the nodeboot program and through scripts on the master node that are remotely executed on the compute node.

The nodeboot program loads the network driver for the private cluster interface, and then again requests the IP address. This establishes a "real" connection to the master node using the TCP/IP stack and NIC driver.

Nodeboot then starts the kernel logging daemon, forwarding all kernel messages from the compute node's /var/log/boot and /var/log/messages files to the master node, where they are prefixed with the identity of the compute node. To facilitate debugging node booting problems, the kernel logging daemon is started as soon as the network driver is loaded and the network connection to the master node is established.

The rootfs

Once the network connection to the master node is established and kernel logging has been started, nodeboot requests the rootfs archive, using the path passed on the kernel command line. Beoserv provides the rootfs tarball, which is then uncompressed and expanded into a RAM disk.

Nodeboot adds additional dynamic content to the directory structure, then loads the BProc module and the BPslave daemon. Nodeboot then assumes the role of init process, waiting for BPslave to terminate, re-parenting orphan processes, and reaping terminated processes.
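To illustrate what "reaping terminated processes" means for an init-like process, the following Unix-only sketch waits for every child and records its exit code. It is a generic demonstration of the technique, not nodeboot's actual code; the `reap_children` function is a hypothetical name.

```python
import os
from typing import Dict


def reap_children() -> Dict[int, int]:
    """Wait for every child of this process and return {pid: exit code}."""
    exit_codes: Dict[int, int] = {}
    while True:
        try:
            pid, status = os.wait()  # blocks until some child terminates
        except ChildProcessError:
            break  # no children left to reap
        if os.WIFEXITED(status):
            exit_codes[pid] = os.WEXITSTATUS(status)
    return exit_codes


child = os.fork()
if child == 0:
    os._exit(7)  # child: terminate immediately with a recognizable status

reaped = reap_children()
print(reaped[child])
```

A real init process would run such a loop for its whole lifetime, since orphaned processes are re-parented to it and must also be waited for.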

BPslave

The BPslave daemon establishes a connection to BPmaster on the master node, and indicates that the compute node is ready to begin accepting work. BPmaster then launches the node_up script, which runs on the master node but completes initialization of the compute node using the BProc commands (bpsh, bpcp, and bpctl).

BProc Distributed Process Space

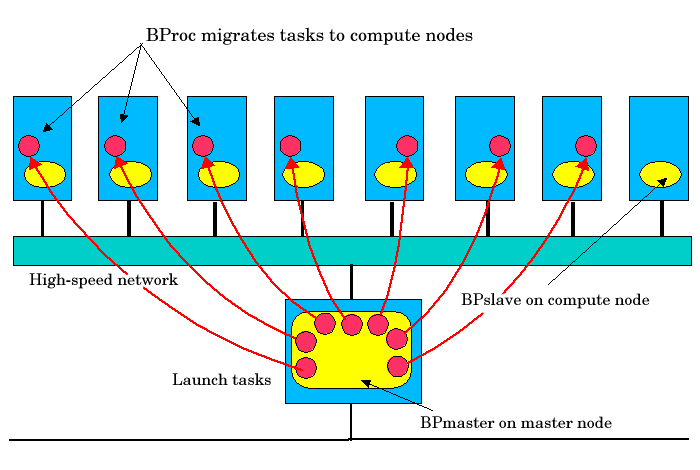

Scyld BeoMaster is able to provide a single system image through its use of BProc, the Scyld process space management kernel enhancement. BProc enables the processes running on cluster compute nodes to be visible and manageable on the master node. Processes start on the master node and are migrated to the appropriate compute node by BProc process migration code. Process parent-child relationships and UNIX job control information are maintained with the migrated jobs, as follows:

- All processes appear in the master node's process table.

- All standard UNIX signals (kill, suspend, resume, etc.) can be sent to any process on a compute node from the master.

- The stdin, stdout, and stderr streams of jobs are redirected back to the master through a socket.

BProc is one of the primary features that make a Scyld cluster different from a traditional Beowulf cluster. It is the key software component that makes compute nodes appear as attached computational resources to the master node. The accompanying figure depicts the role BProc plays in a Scyld cluster.

BProc itself is divided into two components:

- BPmaster: a daemon program that runs on the master node at all times

- BPslave: a daemon program that runs on each of the compute nodes

The BPmaster daemon uses a process migration module (VMADump in older Scyld systems or TaskPacker in newer Scyld systems) to freeze a running process so that it can be transferred to a remote node. The same module is also used by the BPslave daemon to thaw the process after it has been received. In a nutshell, the process migration module saves or restores a process's memory space to or from a stream. In the case of BProc, the stream is a TCP socket connected to the remote machine.

VMADump and TaskPacker implement an optimization that greatly reduces the size of the memory space required for storing a frozen process. Most programs on the system are dynamically linked; at run-time, they will use mmap to map copies of various libraries into their memory spaces. Since these libraries are demand paged, the entire library is always mapped even if most of it will never be used. These regions must be included when copying a process's memory space and included again when the process is restored. This is expensive, since the C library dwarfs most programs in size.

For example, the following is the memory space for the program sleep. This is taken directly from /proc/pid/maps.

    08048000-08049000 r-xp 00000000 03:01 288816 /bin/sleep
    08049000-0804a000 rw-p 00000000 03:01 288816 /bin/sleep
    40000000-40012000 r-xp 00000000 03:01 911381 /lib/ld-2.1.2.so
    40012000-40013000 rw-p 00012000 03:01 911381 /lib/ld-2.1.2.so
    40017000-40102000 r-xp 00000000 03:01 911434 /lib/libc-2.1.2.so
    40102000-40106000 rw-p 000ea000 03:01 911434 /lib/libc-2.1.2.so
    40106000-4010a000 rw-p 00000000 00:00 0
    bfffe000-c0000000 rwxp fffff000 00:00 0

The total size of the memory space for this trivial program is 1,089,536 bytes; all but 32K of that comes from shared libraries. VMADump and TaskPacker take advantage of this; instead of storing the data contained in each of these regions, they store a reference to the regions. When the image is restored, mmap will map the appropriate files to the same memory locations.
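The arithmetic behind these figures can be checked with a short script that sums the size of each mapped region in the listing. This is only a sketch: treating every path under `/lib/` as a shared library is an assumption that happens to fit this particular listing, and the `region_sizes` helper is a hypothetical name.

```python
# The memory map of sleep, one region per line, as in /proc/<pid>/maps.
MAPS = """\
08048000-08049000 r-xp 00000000 03:01 288816 /bin/sleep
08049000-0804a000 rw-p 00000000 03:01 288816 /bin/sleep
40000000-40012000 r-xp 00000000 03:01 911381 /lib/ld-2.1.2.so
40012000-40013000 rw-p 00012000 03:01 911381 /lib/ld-2.1.2.so
40017000-40102000 r-xp 00000000 03:01 911434 /lib/libc-2.1.2.so
40102000-40106000 rw-p 000ea000 03:01 911434 /lib/libc-2.1.2.so
40106000-4010a000 rw-p 00000000 00:00 0
bfffe000-c0000000 rwxp fffff000 00:00 0
"""


def region_sizes(maps_text):
    """Yield (size_in_bytes, path) for each line of a maps listing."""
    for line in maps_text.splitlines():
        fields = line.split()
        start, end = (int(addr, 16) for addr in fields[0].split("-"))
        path = fields[5] if len(fields) > 5 else ""  # anonymous regions have no path
        yield end - start, path


total = sum(size for size, _ in region_sizes(MAPS))
# Assumption for this listing: anything under /lib/ is a shared library.
library = sum(size for size, path in region_sizes(MAPS) if path.startswith("/lib/"))

print(total)            # 1089536
print(total - library)  # 32768, i.e. 32K
```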

In order for this optimization to work, VMADump and TaskPacker must know which files to expect in the location where they are restored. The bplib utility is used to manage a list of files presumed to be present on remote systems.

Compute Node Categories

Each compute node in the cluster is classified into one of three categories by the master node: "configured", "ignored", or "unknown". The classification of a node is dictated by whether or where it is listed in one of the following files:

- The cluster config file /etc/beowulf/config (includes both "configured" and "ignored" nodes)

- The unknown addresses file /var/beowulf/unknown_addresses (includes "unknown" nodes only)

When a compute node completes its initial boot process, it begins to send out DHCP requests on all the network interface devices that it finds. When the master node receives a DHCP request from a new node, the new node will automatically be added to the cluster as "configured" until the maximum configured node count is reached. After that, new nodes will be classified as "ignored". Nodes will be considered "unknown" only if BeoSetup isn't configured to auto-insert or auto-append new nodes.

The cluster administrator can change the default node classification behavior using the BeoSetup program or by manually editing the /etc/beowulf/config file. The classification of any specific node can also be changed manually by the cluster administrator. See the Chapter called Configuring the Cluster with BeoSetup for information on configuring the cluster with BeoSetup and the Chapter called Configuring the Cluster Manually for information on configuring the cluster manually. Also see the Appendix called Special Directories, Configuration Files, and Scripts to learn about special directories, configuration files, and scripts.

Following are definitions of the node categories.

- Configured

A "configured" node is one that is listed in the cluster config file /etc/beowulf/config using the node tag. These are nodes that are formally part of the cluster, and are recognized as such by the master node. When running jobs on your cluster, the "configured" nodes are the ones actually used as computational resources by the master.

- Ignored

An "ignored" node is one that is listed in the cluster config file /etc/beowulf/config using the ignore tag. These nodes are not considered part of the cluster, and will not receive the appropriate responses from the master during their boot process. New nodes that attempt to join the cluster after it has reached its maximum configured node count will be automatically classified as "ignored".

The cluster administrator can also classify a compute node as "ignored" whenever the master node should simply ignore that node. For example, the administrator may choose to temporarily reclassify a node as "ignored" while performing hardware maintenance activities during which the node may be rebooting frequently.

- Unknown

An "unknown" node is one not formally recognized by the cluster as being either "configured" or "ignored". When the master node receives a DHCP request from a node not already listed as "configured" or "ignored" in the cluster configuration file, and BeoSetup isn't configured to auto-insert or auto-append new nodes, it classifies the node as "unknown". The node will be listed in the /var/beowulf/unknown_addresses file.
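The classification rules above can be summarized as a small decision function. This is a sketch under stated assumptions: nodes are identified here by MAC address strings, and the `classify_new_node` function, its parameters, and the in-memory lists are hypothetical stand-ins for the config file and the unknown addresses file, not the actual BeoSetup logic.

```python
from typing import List


def classify_new_node(configured: List[str], ignored: List[str], mac: str,
                      max_nodes: int, auto_add: bool) -> str:
    """Classify a MAC address seen in a DHCP request, following the rules above."""
    if mac in configured:
        return "configured"
    if mac in ignored:
        return "ignored"
    if not auto_add:
        return "unknown"        # would be recorded in the unknown addresses file
    if len(configured) < max_nodes:
        configured.append(mac)  # automatically added as a configured node
        return "configured"
    ignored.append(mac)         # maximum configured node count already reached
    return "ignored"


configured = ["00:00:00:00:00:01"]
ignored: List[str] = []
print(classify_new_node(configured, ignored, "00:00:00:00:00:02", 2, True))   # configured
print(classify_new_node(configured, ignored, "00:00:00:00:00:03", 2, True))   # ignored
print(classify_new_node(configured, ignored, "00:00:00:00:00:04", 2, False))  # unknown
```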

Compute Node States

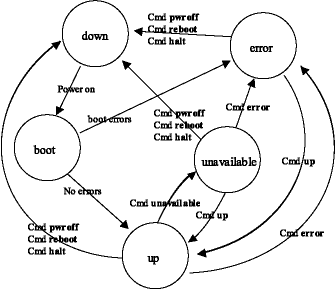

Cluster compute nodes may be in any of several functional states, such as down, up, or unavailable. Some of these states are transitional (boot or shutdown); some are informational variants of the up state (unavailable and error). BProc actually handles only 3 node operational variations:

- The node is not communicating: down. Variations of the down state may record the reason, such as halted (known to be halted by the master) or reboot (the master shut down the node with a reboot command).

- The node is communicating: up, unavailable, or error. Here the strings indicate different levels of usability.

- The node is transitioning: boot. This state has varying levels of communication, operating on a scripted sequence.

During a normal power-on sequence, the user will see the node state change from down to boot to up. Depending on the machine speed, the boot phase may be very short and may not be visible due to the update rate of BeoStatus. All state information is reset to down whenever the BPmaster daemon is started/restarted.

In the accompanying state diagram, note that these states can also be reached via imperative commands such as bpctl. This command can be used to put the node into the error state, such as in response to an error condition detected by a script.

Following are definitions of the compute node states:

- down

From the master node's view, down means only that there is no communication with the compute node. A node is down when it is powered off, has been halted, has been rebooted, has a network link problem, or has some other hardware problem that prevents communication.

- boot

This is a transitional state, during which the node will not accept user commands. The boot state is set when the node_up script has started, and the node transitions to up or error when the script has completed. While in the boot state, the node responds to administrator commands, but the state indicates that the node is still being configured for normal operation. The duration of this state varies with the complexity of the node_up script.

- up

This is a functional state, set when the node_up script has completed without encountering any errors. BProc checks the return status of the script and sets the node state to up if the script was successful. This is the only state where the node is available to non-administrative users, as BProc checks this before moving any program to a node; administrator programs bypass this check. This state may also be commanded when the previous state was unavailable or error.

- error

This is an informational state, set when the node_up script has exited with errors. The administrator may access the node, or look in the /var/log/beowulf/node.x file to determine the problem. If a problem is seen to be non-critical, the administrator may then set the node to up.

- unavailable

This is a functional state. The node is not available for non-administrative users; however, it is completely available to the administrator. Currently running jobs will not be affected by a transition to this state. With respect to job control, this state comes into play only when attempting to run new jobs, as new jobs will fail to migrate to a node marked unavailable. This state is intended to allow node maintenance without having to bring the node offline.
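The state definitions above can be modeled as a small transition table. This is an interpretation of the prose, not BProc's implementation: the `ALLOWED` table covers only the transitions the text mentions (plus loss of communication from any state), and the function names are hypothetical.

```python
# Transitions mentioned in the text; any communicating state can fall back to down.
ALLOWED = {
    "down": {"boot"},
    "boot": {"up", "error", "down"},
    "up": {"unavailable", "error", "down"},
    "error": {"up", "down"},
    "unavailable": {"up", "down"},
}


def transition(state: str, new_state: str) -> str:
    """Return new_state if the move is in the table, otherwise raise ValueError."""
    if new_state not in ALLOWED[state]:
        raise ValueError(f"transition {state} -> {new_state} is not modeled")
    return new_state


def accepts_user_jobs(state: str) -> bool:
    """Only the up state is available to non-administrative users."""
    return state == "up"


# A normal power-on sequence: down, then boot, then up.
state = "down"
for nxt in ("boot", "up"):
    state = transition(state, nxt)
print(state, accepts_user_jobs(state))
```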

Miscellaneous Components

Scyld ClusterWare includes several miscellaneous components, such as name lookup functionality (BeoNSS), IP communications ports, library caching, and external data access.

BeoNSS

BeoNSS provides name service lookup functionality for Scyld ClusterWare. The information it provides includes hostnames, netgroups, and user information.

- Hostnames

BeoNSS provides dynamically generated hostnames for all the nodes in the cluster. The hostnames are of the form .<nodenumber>, so the hostname for node 0 would be ".0", the hostname for node 50 would be ".50", and the hostname for the master node would be ".-1".

BeoNSS also provides the hostname "master", which always points to the IP of the master node on the cluster's internal network. The hostnames ".-1" and "master" will always point to the same IP.

These hostnames will always point to the right IP address based on the configuration of your IP range. You don't need to do anything special for these hostnames to work. Also, these hostnames will work on the master node or any of the compute nodes.
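The naming scheme is simple enough to express in a few lines. The sketch below only builds and parses names of the form described above; it does not perform any lookup, and the function names are hypothetical.

```python
MASTER_NODE = -1  # the master node is node -1


def beonss_hostname(node: int) -> str:
    """Return the dotted hostname for a node number, e.g. 0 -> '.0'."""
    if node < MASTER_NODE:
        raise ValueError("node numbers start at -1 (the master)")
    return f".{node}"


def node_number(hostname: str) -> int:
    """Return the node number for a dotted hostname or for 'master'."""
    if hostname == "master":
        return MASTER_NODE
    if not hostname.startswith("."):
        raise ValueError(f"not a cluster hostname: {hostname!r}")
    return int(hostname[1:])


print(beonss_hostname(0))     # .0
print(beonss_hostname(50))    # .50
print(node_number("master"))  # -1
```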

Note that BeoNSS does not know the hostname and IP address that the master node uses for the outside network. If your master node has the name "mycluster" and uses the IP address 1.2.3.4 for the outside network, when you are on a compute node you will be unable to open a connection to "mycluster" or 1.2.3.4. However, you will be able to connect to "mycluster" or ".-1" if IP forwarding is enabled in both the /etc/beowulf/config file and the /etc/sysctl.conf file.

When you enable IP forwarding, you can connect to the master by using its public IP address or well-known hostname, provided you have your DNS services working and available on the compute nodes. Also, you can have your compute nodes make connections outside of the cluster. The master node will set up NAT routing between your compute nodes and the outside world, so your compute nodes will be able to make outbound connections. However, this does not enable outsiders to access or "see" your compute nodes.

On compute nodes, NFS directories must be mounted using either the IP address or the "$MASTER" keyword; the hostname cannot be used. This is because fstab is evaluated before /etc is populated on the compute node, so hostnames cannot be resolved at that point.

- Netgroups

Netgroups are a concept from NIS. They make it easy to specify an arbitrary list of machines, then treat all those machines the same when carrying out an administrative procedure (for example, specifying what machines to export NFS file systems to).

BeoNSS creates one netgroup called cluster, which includes all of the nodes in the cluster. This is used in the default /etc/exports file in order to easily export /home to all of the compute nodes.

- User Information

When jobs are running on the compute nodes, BeoNSS allows the standard getpwnam() and getpwuid() functions to successfully retrieve information (such as username, home directory, shell, and uid), as long as these functions are retrieving information on the user that is running the program. All other information that getpwnam() and getpwuid() would normally retrieve will be set to "NULL".
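As an illustration of the kind of lookup that is expected to succeed, the following sketch queries the password database for the user running the program. It uses Python's standard pwd module, which wraps the C getpwuid() function; the `current_user_info` helper is a hypothetical name, and the behavior on a compute node is as described above rather than something this snippet verifies.

```python
import os
import pwd
from typing import Optional


def current_user_info() -> Optional[dict]:
    """Look up the password-database entry for the user running this program."""
    try:
        entry = pwd.getpwuid(os.getuid())
    except KeyError:
        return None  # no entry is available for this uid
    return {
        "name": entry.pw_name,
        "uid": entry.pw_uid,
        "home": entry.pw_dir,
        "shell": entry.pw_shell,
    }


print(current_user_info())
```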

IP Communications Ports

Scyld ClusterWare uses a few TCP/IP and UDP/IP communication ports when sending information between nodes. Normally, this should be completely transparent to the user. However, if the cluster is using a switch that blocks various ports, it may be important to know which ports are being used and for what.

Following are key components of Scyld ClusterWare and the ports they use:

- Beoserv: This daemon is responsible for replying to the DHCP request from a compute node when it is booting. The reply includes a new kernel, the kernel command line options, and a small final boot RAM disk. The daemon supports both multi-cast and uni-cast file serving.

  By default, Beoserv uses TCP port 1556. This can be overridden by changing the value of the server directive in the /etc/beowulf/config file to the desired transport mode and port number.

- BProc: This ClusterWare component provides unified process space, process migration, and remote execution of commands on compute nodes. By default, BProc uses TCP port 2223.

- Beostat: This service collects performance metrics and status information from compute nodes and caches this information on the master node. It uses multi-cast addresses 224.27.0.1 and 224.27.0.2 on UDP port 3040.
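When diagnosing a switch or firewall that may be blocking traffic, it can help to have the default ports in one place and a quick way to probe the TCP ones. The sketch below is a generic connectivity check, not a ClusterWare tool; the `DEFAULT_PORTS` table simply restates the defaults listed above, and `tcp_port_open` is a hypothetical helper.

```python
import socket

# Default ports as listed above: component -> (protocol, port).
DEFAULT_PORTS = {
    "beoserv": ("tcp", 1556),
    "bproc": ("tcp", 2223),
    "beostat": ("udp", 3040),
}


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `tcp_port_open("master", DEFAULT_PORTS["bproc"][1])` run from a compute node would indicate whether the BProc port on the master is reachable, assuming the defaults have not been overridden. UDP multicast traffic such as Beostat's cannot be checked this way.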

Library Caching

One of the features Scyld ClusterWare uses to improve the performance of transferring jobs to and from compute nodes is to cache libraries. When BProc needs to migrate a job between nodes, it uses the process migration code (VMADump or TaskPacker) to take a snapshot of all the memory the process is using, including the binary and shared libraries. This memory snapshot is then sent across the private cluster network during process migration.

VMADump and TaskPacker take advantage of the fact that libraries are being cached on the compute nodes. The shared library data is not included in the snapshot, which reduces the amount of information that needs to be sent during process migration. By not sending over the libraries with each process, Scyld ClusterWare is able to reduce network traffic, thus speeding up cluster operations.

External Data Access

There are several common ways for processes running on a compute node to access data stored externally to the cluster, as discussed below.

- Transfer the data. You can transfer the data to the master node using a protocol such as scp or ftp, then treat it as any other file that resides on the master node.

- Access the data through a network file system, such as NFS or AFS. Any remote file system mounted on the master node can't be re-exported to the compute node. Therefore, you need to use another method to access the data on the compute nodes. There are two options:

  - Use bpsh to start your job, and use shell redirection on the master node to send the data as stdin for the job.

  - Use MPI and have the rank 0 job read the data, then use MPI's message passing capabilities to send the data.

- NFS mount directories from external file servers. There are two options:

  - For file servers directly connected to the cluster private network, this can be done directly, using the file server's IP address. Note that the server name cannot be used, because the name service is not yet up when fstab is evaluated.

  - For file servers external to the cluster, setting up IP forwarding on the master node allows the compute nodes to mount exported directories using the file server's IP address.

- Use a cluster file system. If you have questions regarding the use of any particular cluster file system with Scyld ClusterWare, contact Scyld Customer Support for assistance.
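The bpsh option listed above (start the job with bpsh and feed it data from the master as stdin) can be sketched from a script on the master node. This assumes that bpsh is on the PATH and is invoked as `bpsh <node number> <command>`; the helper names and the example file path are hypothetical.

```python
import subprocess
from typing import List


def bpsh_command(node: int, argv: List[str]) -> List[str]:
    """Build the argument list for running argv on a compute node via bpsh."""
    return ["bpsh", str(node), *argv]


def run_with_stdin(node: int, argv: List[str], data_path: str) -> int:
    """Run argv on the node, feeding it a file on the master as stdin."""
    with open(data_path, "rb") as data:
        return subprocess.run(bpsh_command(node, argv), stdin=data).returncode


print(bpsh_command(3, ["wc", "-l"]))
```

Calling `run_with_stdin(3, ["wc", "-l"], "/path/on/master/input.dat")` would be the equivalent of redirecting that file into the job with `<` in a shell on the master node.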