Scyld ClusterWare Design Description

This chapter discusses the design behind Scyld ClusterWare, beginning with a high-level description of the system architecture for the cluster as a whole, including the hardware context, network topologies, data flows, software context, and system level files. From there, the discussion moves into a technical description that includes the compute node boot procedure, the process migration technology, compute node categories and states, and miscellaneous components. Finally, the discussion focuses on the ClusterWare software components, including tools, daemons, clients, and utilities.

As mentioned in the preface, this document assumes a certain level of knowledge from the reader; it therefore does not cover any system design decisions related to a basic Linux system. It also assumes the reader has a general understanding of Linux clustering concepts and of how the second generation Scyld ClusterWare system differs from the traditional Beowulf. For more information on these topics, see the User's Guide.

System Architecture

Scyld ClusterWare provides a software infrastructure designed specifically to streamline the process of configuring, administering, running, and maintaining commercial production Linux cluster systems. Scyld ClusterWare installs on top of a standard Linux distribution on a single node, allowing that node to function as the control point or "master node" for the entire cluster of "compute nodes".

This section discusses the Scyld ClusterWare hardware context, network topologies, system data flow, system software context, and system level files.

System Hardware Context

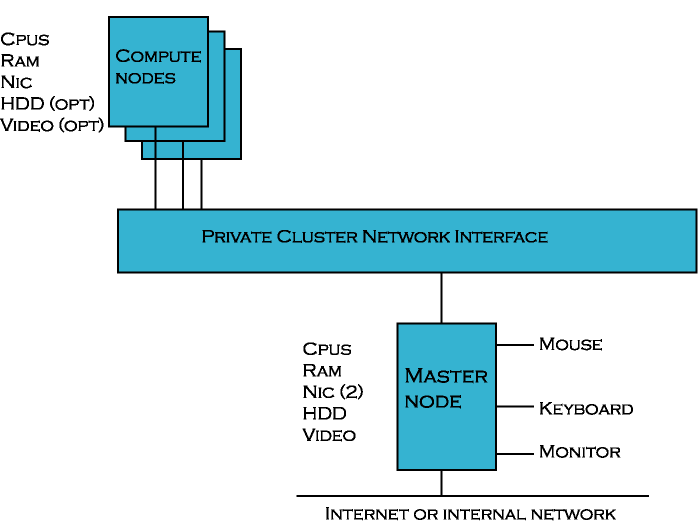

A Scyld cluster has three primary components:

The master node

Compute nodes

The cluster private network interface

The master node and compute nodes have different roles in Scyld ClusterWare, and thus they have different hardware requirements. The master node is the central administration console for the cluster; it is the machine that all users of the cluster log into for starting their jobs. The master node is responsible for sending these jobs out to the appropriate compute node(s) for execution. The master node also performs all the standard tasks of a Linux machine, such as queuing print jobs or running shells for individual users.

Master Node

Given the role of the master node, it is easy to see why its hardware closely resembles that of a standard Linux machine. The master node will typically have the standard human user interface devices such as a monitor, keyboard, and mouse. It may have a fast 3D video card, depending on the cluster's application.

The master is usually equipped with two network interface cards (NICs). One NIC connects the master to the cluster's compute nodes over the private cluster network, and the other NIC connects the master to the outside world.

The master should be equipped with enough hard disk space to satisfy the demands of its users and the applications it must execute. The Linux operating system and Scyld ClusterWare together use about 7 GB of disk space. We recommend at least a 20 GB hard disk for the master node.

The master node should contain a minimum of 512 MB of RAM, or enough RAM to avoid swap during normal operations; 1 GB is recommended. Having to swap programs to disk will degrade performance significantly, and RAM is relatively cheap.
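The disk and memory figures above can be turned into a quick planning check. The following is a minimal sketch, not a ClusterWare tool: the function name `check_master_node` and its parameters are hypothetical, and the thresholds are simply the figures quoted in this section.

```python
# Documented figures for the master node, taken from the text above.
BASE_INSTALL_GB = 7         # Linux plus Scyld ClusterWare, approximately
RECOMMENDED_DISK_GB = 20
MIN_RAM_MB = 512
RECOMMENDED_RAM_MB = 1024   # 1 GB


def check_master_node(disk_gb, ram_mb):
    """Return a list of warnings; an empty list means all figures are met.

    Hypothetical helper for illustration only.
    """
    warnings = []
    if disk_gb < BASE_INSTALL_GB:
        warnings.append("disk is smaller than the base install (about 7 GB)")
    elif disk_gb < RECOMMENDED_DISK_GB:
        warnings.append("disk is below the recommended 20 GB")
    if ram_mb < MIN_RAM_MB:
        warnings.append("RAM is below the 512 MB minimum")
    elif ram_mb < RECOMMENDED_RAM_MB:
        warnings.append("RAM is below the recommended 1 GB")
    return warnings


if __name__ == "__main__":
    for message in check_master_node(disk_gb=16, ram_mb=768):
        print("warning:", message)
```

These thresholds cover only the base system; user data and application working sets still need to be added on top when sizing a real master node.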

Any network attached storage should be connected to both the private cluster network and the public network through separate interfaces.

In addition, if you plan to create boot CDs for your compute nodes, the master node requires a CD-RW or writeable DVD drive.

Compute Nodes

In contrast to the master node, the compute nodes are single-purpose machines. Their role is to run the jobs sent to them by the master node. If the cluster is viewed as a single large-scale parallel computer, then the compute nodes are its CPU and memory resources. They don't have any login capabilities and aren't running any of the daemons typically found on a standard Linux box. Since it's impossible to log into a compute node, these nodes don't need a monitor, keyboard, or mouse.

Video cards aren't required for compute nodes either (but may be required by the BIOS). However, having an inexpensive video card installed may prove cost effective when debugging hardware problems.

To facilitate debugging of hardware and software configuration problems on compute nodes, Scyld ClusterWare provides forwarding of all kernel log messages to the master's log, and all messages generated while booting a compute node are also forwarded to the master node. Another hardware debug solution is to use a serial port connection back to the master node from the compute nodes. The kernel command line options for a compute node can be configured to display all boot information to the serial port.

We recommend a minimum of 512 MB of RAM for each compute node to accommodate the uncompressed root file system. Beyond this amount, the RAM in a compute node is typically sized to avoid swap during normal operations. Many users have installed sufficient RAM to create a large RAM disk to hold their database or other large data files; this avoids using the much slower disk drives for file access.

A hard drive is not a required component for a compute node, but one is needed for swap space if the data does not all fit into physical memory. If local disks are required on the compute nodes, we recommend using them for storing data that can be easily re-created, such as scratch storage or local copies of globally-available data.

If the compute nodes do not support PXE boot, a floppy (32-bit architectures) or bootable CD-ROM drive (32- and 64-bit architectures) is required.
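The boot media rule above can be written as a small decision function. This is a sketch for planning purposes only; the function name and the string labels are hypothetical and do not correspond to any ClusterWare setting.

```python
def boot_media_options(supports_pxe, arch_bits):
    """List the boot media a compute node can use, per the rule above.

    Hypothetical helper: the labels "pxe", "floppy", and "cdrom" are
    illustrative names, not ClusterWare identifiers.
    """
    if arch_bits not in (32, 64):
        raise ValueError("arch_bits must be 32 or 64")
    if supports_pxe:
        # With PXE available, no local boot drive is required.
        return ["pxe"]
    # Without PXE, a bootable CD-ROM works on both architectures;
    # a floppy is an option on 32-bit architectures only.
    options = ["cdrom"]
    if arch_bits == 32:
        options.insert(0, "floppy")
    return options


if __name__ == "__main__":
    print(boot_media_options(supports_pxe=False, arch_bits=64))
```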

Network Topologies

For many applications that will be run on Scyld ClusterWare, an inexpensive Ethernet network is all that is needed. Other applications might require multiple networks to obtain the best performance; these applications generally fall into two categories, "message intensive" and "server intensive". The following sections describe a minimal network configuration, a performance network for "message intensive" applications, and a server network for "server intensive" applications.

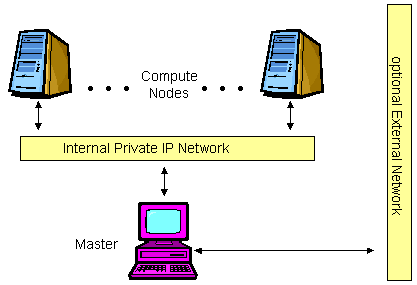

Minimal Network Configuration

Scyld ClusterWare requires that at least one IP network be installed to enable master and compute node communications. This network can range in speed from 100 Mbps (Fast Ethernet) to over 1 Gbps, depending on cost and performance requirements.

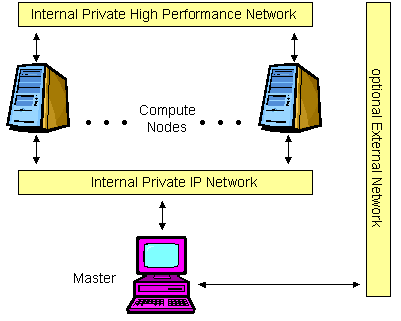

Performance Network Configuration

The performance network configuration is intended for applications that can benefit from the low message latency of proprietary networks like InfiniBand, TOE Ethernet, or RDMA Ethernet. These networks can optionally run without the overhead of an IP stack, using direct memory-to-memory messaging. In this configuration, the lower bandwidth requirements of the Scyld software are served by a standard IP network, which frees the performance network from OS-related overhead.

These high performance interfaces may also run an IP stack, in which case they can be used in the other configurations as well.

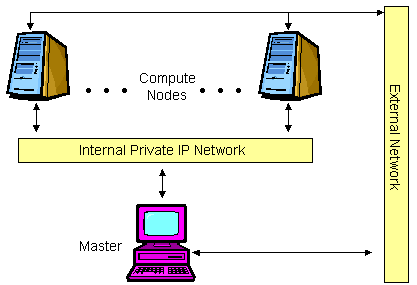

Server Network Configuration

The server network configuration is intended for web, database, or application servers. In this configuration, each compute node has multiple network interfaces, one for the private control network and one or more for the external public networks.

The Scyld ClusterWare security model is well-suited for this configuration. Even though the compute nodes have a public network interface, there is no way to log into them. There is no /etc/passwd file or other configuration files to hack. There are no shells on the compute nodes to execute user programs. The only open ports on the public network interface are the ones your specific application opened.

To maintain this level of security, you may wish to have the master node on the internal private network only. The setup for this type of configuration is not described in this document, because it is very dependent on your target deployment. Contact Scyld's technical support for help with a server network configuration.

System Data Flow

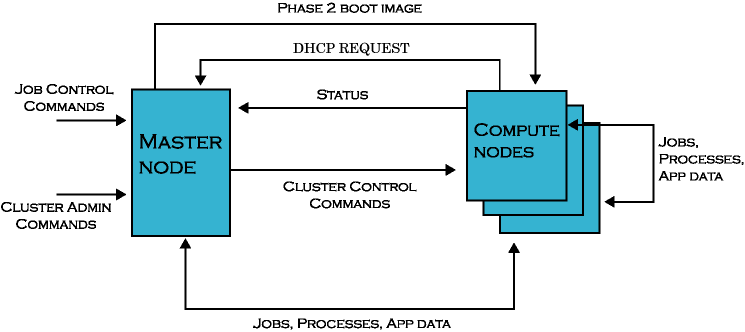

The data flow diagram shows the primary messages sent over the private cluster network between the master node and compute nodes in a Scyld cluster. Data flows in three ways:

From the master node to the compute nodes

From the compute nodes to the master node

From the compute nodes to other compute nodes

The job control commands and cluster admin commands shown in the data flow diagram represent inputs to the master from users and administrators.

Master Node to Compute Node

Following is a list of the data items sent from the master node to a compute node, as depicted in the data flow diagram.

Cluster control commands — These are the commands sent from the master to the compute node telling it to perform such tasks as rebooting, halting, powering off, etc.

Files to be cached — The master node sends the files to be cached on the compute nodes under Scyld JIT provisioning.

Jobs, processes, signals, and app data — These include the process snapshots captured by BeoMaster for migrating processes between nodes, as well as the application data sent between jobs. BeoMaster is the collection of software that makes up Scyld, including Beoserv for PXE/DHCP, BProc, Beomap, BeoNSS, and Beostat.

Final boot images — The final boot image (formerly called the Phase 2 boot image) is sent from the master to a compute node in response to its Dynamic Host Configuration Protocol (DHCP) requests during its boot procedure.

Compute Node to Master Node

Following is a list of the data items sent from a compute node to the master node, as depicted in the data flow diagram.

DHCP and PXE requests — These requests are sent to the master from a compute node while it is booting. In response, the master replies with the node's IP address and the final boot image.

Jobs, processes, signals, and app data — These include the process snapshots captured by BeoMaster for migrating processes between nodes, as well as the application data sent between jobs.

Performance metrics and node status — All the compute nodes in a Scyld cluster send periodic status information back to the master.

Compute Node to Compute Node

Following is a list of the data items sent between compute nodes, as depicted in the data flow diagram.

Jobs, processes, app data — These include the process snapshots captured by BeoMaster for migrating processes between nodes, as well as the application data sent between jobs.
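The three lists above can be summarized as a lookup table keyed by direction. This is a minimal sketch for reference; the names `DATA_FLOWS` and `flows` are hypothetical, and the entries are just the item labels from this section.

```python
# Data items exchanged over the private cluster network, by direction.
# Keys are (source, destination); values are the item labels used above.
DATA_FLOWS = {
    ("master", "compute"): [
        "cluster control commands",
        "files to be cached",
        "jobs, processes, signals, and app data",
        "final boot images",
    ],
    ("compute", "master"): [
        "DHCP and PXE requests",
        "jobs, processes, signals, and app data",
        "performance metrics and node status",
    ],
    ("compute", "compute"): [
        "jobs, processes, and app data",
    ],
}


def flows(source, destination):
    """Return the data items sent from source to destination.

    Hypothetical helper; returns an empty list for a direction the
    diagram does not show.
    """
    return list(DATA_FLOWS.get((source, destination), []))


if __name__ == "__main__":
    for (src, dst), items in DATA_FLOWS.items():
        print(f"{src} -> {dst}: {', '.join(items)}")
```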

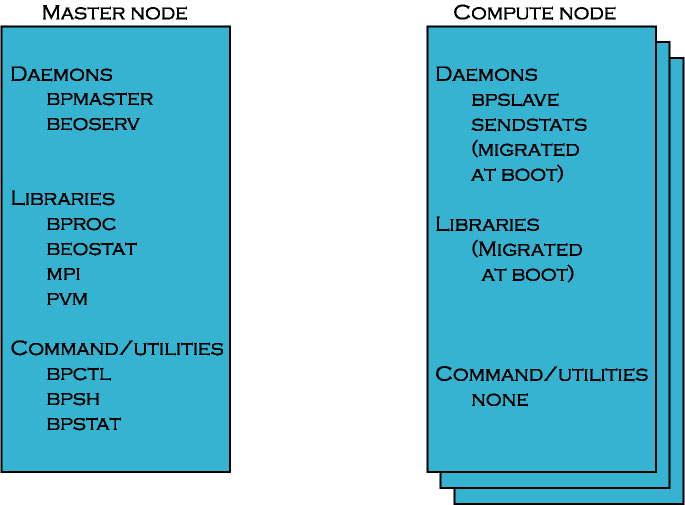

System Software Context

The system software context diagram illustrates the software components available on the nodes in a Scyld cluster.

Master Node Software Components

The master node runs the BPmaster and Beoserv daemons. This node also stores the Scyld-specific libraries libbproc and libbeostat, as well as Scyld-modified versions of utilities such as MPICH and PVM. The commands and utilities are a small subset of all the software tools available on the master node.

Compute Node Software Components

The compute nodes run the nodeboot program, which serves as the init process on the compute nodes and runs the Scyld klogd, BPslave, and sendstats daemons:

klogd is run as soon as the compute node establishes a network connection to the master node, ensuring that the master node begins capturing compute node kernel messages as early as possible.

BPslave is the compute node component of BProc, and is necessary for supporting the unified process space and for migrating processes.

sendstats is necessary for monitoring the load on the compute node, and for monitoring its health.

System Level Files

The following sections briefly describe the system level files found on the master node and compute nodes in a Scyld cluster.

Master Node Files

The file layout on the master node is the layout of the base Linux distribution. For those who are not familiar with the file layout that is commonly used by Linux distributions, here are some things to keep in mind:

/bin, /usr/bin — directories with user level command binaries

/sbin, /usr/sbin — directories with administrator level command binaries

/lib, /usr/lib — directories with static and shared libraries

/usr/include — directory with include files

/etc — directory with configuration files

/var/log — directory with system log files

/usr/share/doc — directory with various documentation files

Scyld ClusterWare also has some special directories on the master node that are useful to know about. The status logs for compute nodes are stored in /var/log/beowulf. The individual files are named node.#, where # is replaced by the node's identification number as reported by the BeoSetup tool, discussed in the Chapter called Configuring the Cluster with BeoSetup. Additional status messages and compute node kernel messages are written to the master's /var/log/messages file.
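The naming convention for compute node status logs can be expressed as a small path helper. This is a sketch under the convention described above (files named node.# in /var/log/beowulf); the function name `node_log_path` is hypothetical and is not part of ClusterWare.

```python
from pathlib import PurePosixPath

# Directory on the master node that holds compute node status logs.
BEOWULF_LOG_DIR = PurePosixPath("/var/log/beowulf")


def node_log_path(node_id):
    """Build the status log path for the compute node with this number.

    Hypothetical helper: it only formats the path and does not check
    that the node or the file exists.
    """
    return BEOWULF_LOG_DIR / f"node.{int(node_id)}"


if __name__ == "__main__":
    print(node_log_path(3))
```

On the master node, the resulting path can be passed to any ordinary file viewer to inspect that node's status log.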

Configuration files for Scyld ClusterWare are found in /etc/beowulf. The directory /usr/lib/beoboot/bin contains the node_up script and various helper scripts that are used to configure compute nodes during boot.

For more information on the special directories, files, and scripts used by Scyld ClusterWare, see the Appendix called Special Directories, Configuration Files, and Scripts. Also see the Reference Guide.

Compute Node Files

Only a few files exist on the compute nodes. For the most part, these files are dynamic libraries; there are almost no actual binaries. For a detailed list of the files that exist on the compute nodes, see the Appendix called Special Directories, Configuration Files, and Scripts.