Supporting Multiple Master Nodes

For ClusterWare 4.2.0 HPC and later releases, Scyld supports multiple master nodes within the cluster.

Many labs and workgroups today have several compute clusters, each one dedicated to different research teams or engineering groups, or used to run different applications. When an unusually large job needs to be run, it may be necessary to combine most or all of the nodes into a single large cluster, and then split up the cluster again when the job is completed. Also, the overall demand for particular applications may change over time, requiring changes in the allocation of nodes to applications.

Multiple master support in ClusterWare lets you respond to these changing demands by migrating nodes between masters in a controlled, methodical way. The Cluster Administrator can reassign idle compute nodes to a different master as needed and put the change into effect at an appropriate time.

Static Partitioning of Compute Nodes Among Multiple Masters

The /etc/beowulf/config file declares the master node's interface to the cluster private network (using the keyword interface), the maximum number of compute nodes (nodes), their range of IP addresses on the cluster private network (iprange), and their MAC addresses (node). The order of the node entries defines the node numbers. By default, a single master node manages all compute nodes. You can find its IP address by running ifconfig `beoconfig interface` and looking for the inet addr field.
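The following minimal Python sketch shows how node numbers follow from the order of the node entries by reading those keywords from config text. It assumes a simple one-keyword-per-line layout with `#` comments, takes only the first field of each node entry, and ignores iprange. The sample configuration and its MAC addresses are hypothetical placeholders, not output from a real cluster, and `parse_nodes` is an illustrative helper, not part of ClusterWare.

```python
# Hypothetical sample; the MAC addresses are placeholders, not real hardware.
SAMPLE_CONFIG = """\
interface eth1
nodes 4
iprange 10.1.1.100 10.1.1.103
node 00:00:00:00:00:01
node 00:00:00:00:00:02
node 00:00:00:00:00:03
"""


def parse_nodes(config_text):
    """Return (interface, max_nodes, {node_number: mac}) from config text.

    Sketch only: assumes one keyword per line and '#' comments; iprange
    and any extra fields on a node line are ignored.
    """
    interface = None
    max_nodes = None
    macs = []
    for raw in config_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        keyword, *args = line.split()
        if keyword == "interface" and args:
            interface = args[0]
        elif keyword == "nodes" and args:
            max_nodes = int(args[0])
        elif keyword == "node" and args:
            macs.append(args[0].lower())  # normalize MAC case
    if max_nodes is not None and len(macs) > max_nodes:
        raise ValueError(f"{len(macs)} node entries exceed nodes {max_nodes}")
    # Node numbers follow the order of the node entries: the first is node 0.
    return interface, max_nodes, dict(enumerate(macs))


if __name__ == "__main__":
    iface, limit, nodes = parse_nodes(SAMPLE_CONFIG)
    print(iface, limit, nodes[2])  # eth1 4 00:00:00:00:00:03
```

Reordering the node lines in the sample would renumber the nodes. This is why the example below replicates the node entries verbatim on every master.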

Alternatively, the compute nodes can be partitioned into sets, each managed by a different master node on the same cluster private network. This partitioning is defined in /etc/beowulf/config with the masterorder keyword.
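The masterorder entries can be read as a mapping from each node number to the IP address of its master. The following Python sketch builds that mapping. `parse_range` and `master_map` are hypothetical helpers. The sketch assumes each entry has the form `masterorder <first>-<last> <master-ip>`, as in the example that follows, and ignores any further fields.

```python
def parse_range(spec):
    """Parse '0-19' or '7' into an inclusive range of node numbers."""
    lo, sep, hi = spec.partition("-")
    return range(int(lo), int(hi if sep else lo) + 1)


def master_map(config_text):
    """Map every node number named in a masterorder entry to its master's IP."""
    owners = {}
    for raw in config_text.splitlines():
        fields = raw.split("#", 1)[0].split()  # drop '#' comments, split on whitespace
        if len(fields) >= 3 and fields[0] == "masterorder":
            # Assumption: the field after the range is the owning master's IP;
            # any further fields are ignored by this sketch.
            for node in parse_range(fields[1]):
                # This sketch rejects overlaps so each node has exactly one master.
                if node in owners:
                    raise ValueError(f"node {node} appears in two masterorder entries")
                owners[node] = fields[2]
    return owners


if __name__ == "__main__":
    example = (
        "masterorder 0-19 10.1.1.1\n"
        "masterorder 20-39 10.1.1.2\n"
    )
    owners = master_map(example)
    print(owners[5], owners[25])  # 10.1.1.1 10.1.1.2
```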

Example 1. Configuring a Cluster with Two Masters

In this example, a 40-node cluster has a single master node at IP address 10.1.1.1. A new master has just been added to the cluster, sharing the original cluster's private network and subnet (netmask). The new master's private interface is set to an address that differs from the original master's and lies outside the compute nodes' IP range (for example, 10.1.1.2).

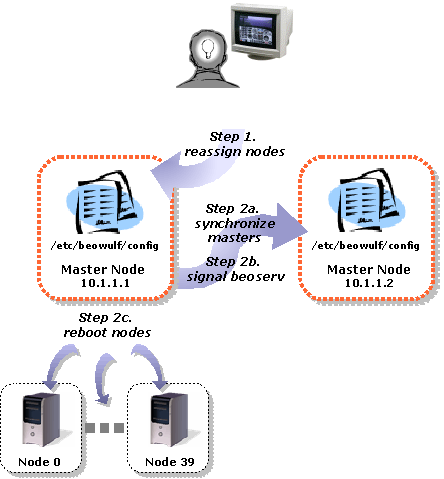

Here's how to change the configuration of the cluster so that each master controls half the compute nodes:

Edit /etc/beowulf/config on the original master at 10.1.1.1. Set the masterorder entries to read as follows:

masterorder 0-19 10.1.1.1
masterorder 20-39 10.1.1.2

Replicate these same masterorder entries and all node entries on the new master in its /etc/beowulf/config file. The 40 node entries define a common naming and ordering of the compute nodes between the two master nodes.

Put this change into effect:

Send SIGHUP to the beoserv daemon on the new master so that it re-reads /etc/beowulf/config and picks up the changes:

[root@cluster ~] # ssh 10.1.1.2 killall -HUP beoserv

Do the same for the beoserv daemon on the original master at 10.1.1.1:

[root@cluster ~] # killall -HUP beoserv

On the master at 10.1.1.1, which is the master for nodes 0 through 19, issue the bpctl -R command with the appropriate node range to reboot the nodes that have been reassigned to the second master:

[root@cluster ~] # bpctl -S 20-39 -R

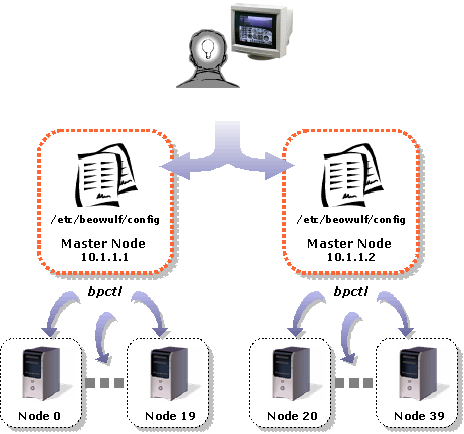

Nodes 0-19 continue to be managed by the first master node, while nodes 20-39 reboot and come under the control of the second master node.

To reassign a compute node from one master to another, perform the same basic steps: edit the masterorder entries, replicate the changes on every master node on the network, run killall -HUP beoserv on every master node, and reboot the affected compute node(s) that moved to a different master.
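The reassignment steps can be sketched as a Python dry run that prints the commands instead of running them. Given hypothetical node-to-master maps for the old and new masterorder settings, `reassignment_plan` first signals beoserv on every master. It then reboots each moved node from the master that controlled it before the change. `reassignment_plan` is an illustrative helper, not a ClusterWare tool. The command strings mirror those in Example 1. Two assumptions should be checked against your installation: that ssh reaches the other masters, and that bpctl accepts the same `-S <range> -R` form for any node range.

```python
def moved_nodes(old, new):
    """Sorted node numbers present in both maps whose master IP changed."""
    return sorted(n for n in new if n in old and old[n] != new[n])


def compress(nodes):
    """Collapse sorted node numbers into 'a-b' (or 'a') range strings."""
    spans = []
    for n in nodes:
        if spans and n == spans[-1][1] + 1:
            spans[-1][1] = n  # extend the current run
        else:
            spans.append([n, n])  # start a new run
    return [f"{a}-{b}" if a != b else str(a) for a, b in spans]


def reassignment_plan(old, new, local_ip):
    """Dry run: list the commands to issue from local_ip, in order."""
    def on(ip, cmd):
        # Commands for another master are wrapped in ssh, as in the example.
        return cmd if ip == local_ip else f"ssh {ip} {cmd}"

    masters = set(old.values()) | set(new.values())
    # Mirror the example: signal the other masters first, then this one.
    order = sorted(ip for ip in masters if ip != local_ip)
    if local_ip in masters:
        order.append(local_ip)
    cmds = [on(ip, "killall -HUP beoserv") for ip in order]

    # Each moved node is rebooted by the master that controlled it before.
    by_old = {}
    for n in moved_nodes(old, new):
        by_old.setdefault(old[n], []).append(n)
    for ip in sorted(by_old):
        for span in compress(by_old[ip]):
            cmds.append(on(ip, f"bpctl -S {span} -R"))
    return cmds


if __name__ == "__main__":
    old = {n: "10.1.1.1" for n in range(40)}
    new = {n: "10.1.1.1" if n < 20 else "10.1.1.2" for n in range(40)}
    for cmd in reassignment_plan(old, new, local_ip="10.1.1.1"):
        print(cmd)
```

With the Example 1 maps, the sketch prints `ssh 10.1.1.2 killall -HUP beoserv`, `killall -HUP beoserv`, and `bpctl -S 20-39 -R`, the same three commands used above. Printing the commands instead of executing them keeps the plan easy to review before any node is rebooted.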

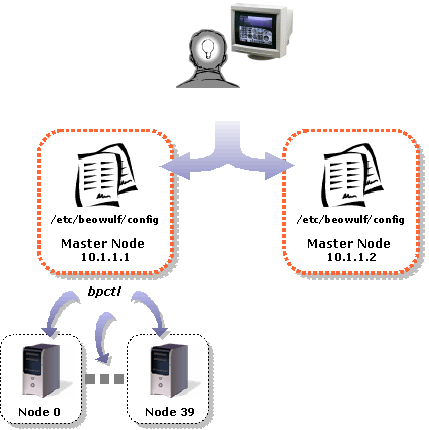

The three diagrams in this example illustrate the general process flow it describes.