| Property | Value |
| --- | --- |
| Host Name | hydra.ucdenver.pvt |
| Operating System | CentOS release 6.7 (Final) |
| Cluster | Scyld ClusterWare release 6.7.6 |
| Number of Nodes | 16 compute nodes plus 1 master node |
| Total CPU Cores | 204 (12 cores on the master node plus 192 cores across the 16 compute nodes) |
| Number of GPUs | 8 Tesla Fermi GPUs |
| Total GPU CUDA Cores | 3584 CUDA cores (8 x 448), 1.15 GHz per core |
| Total Max GFLOPS of CPUs | 480 (2.5 GFLOPS per core) |
| Total Disk Space | 7566 GB |
| Total RAM | 544 GB |
| Total RAM of GPUs | 27 GB |
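The aggregate figures in the summary table follow from the per-unit values, and the short Python sketch below recomputes them as a sanity check. The constant and function names are illustrative only. One point is an inference rather than something the table states: 480 GFLOPS equals 192 x 2.5, so the sketch assumes the GFLOPS total counts the compute-node cores only and excludes the master node.

```python
# Figures copied from the cluster summary table.
COMPUTE_NODES = 16
CORES_PER_COMPUTE_NODE = 12
MASTER_NODE_CORES = 12
GFLOPS_PER_CORE = 2.5
GPU_COUNT = 8
CUDA_CORES_PER_GPU = 448


def cluster_totals():
    """Recompute the aggregate figures from the per-unit values."""
    compute_cores = COMPUTE_NODES * CORES_PER_COMPUTE_NODE
    return {
        "compute_cores": compute_cores,
        "total_cpu_cores": MASTER_NODE_CORES + compute_cores,
        "total_cuda_cores": GPU_COUNT * CUDA_CORES_PER_GPU,
        # Assumption: the 480 GFLOPS figure counts compute-node cores only.
        "compute_gflops": compute_cores * GFLOPS_PER_CORE,
    }


if __name__ == "__main__":
    for name, value in cluster_totals().items():
        print(f"{name}: {value}")
```

Under these assumptions the sketch reproduces the 204 CPU cores, 3584 CUDA cores, and 480 GFLOPS listed in the table.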

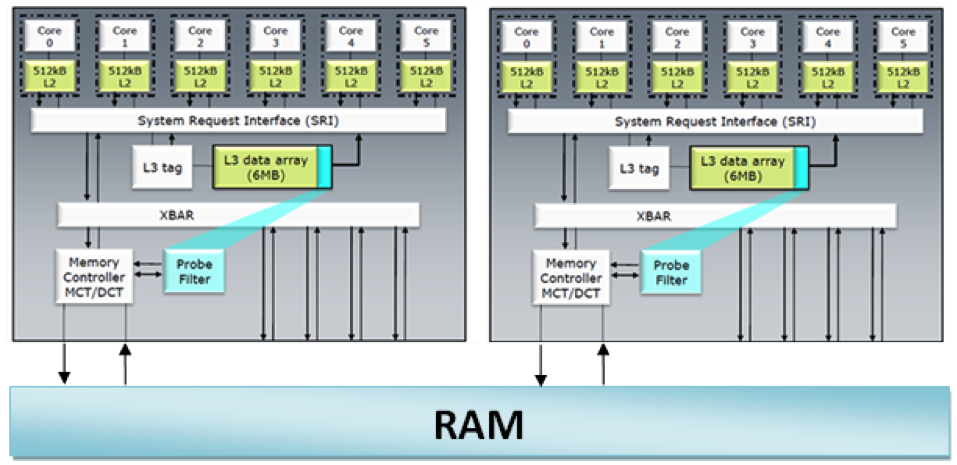

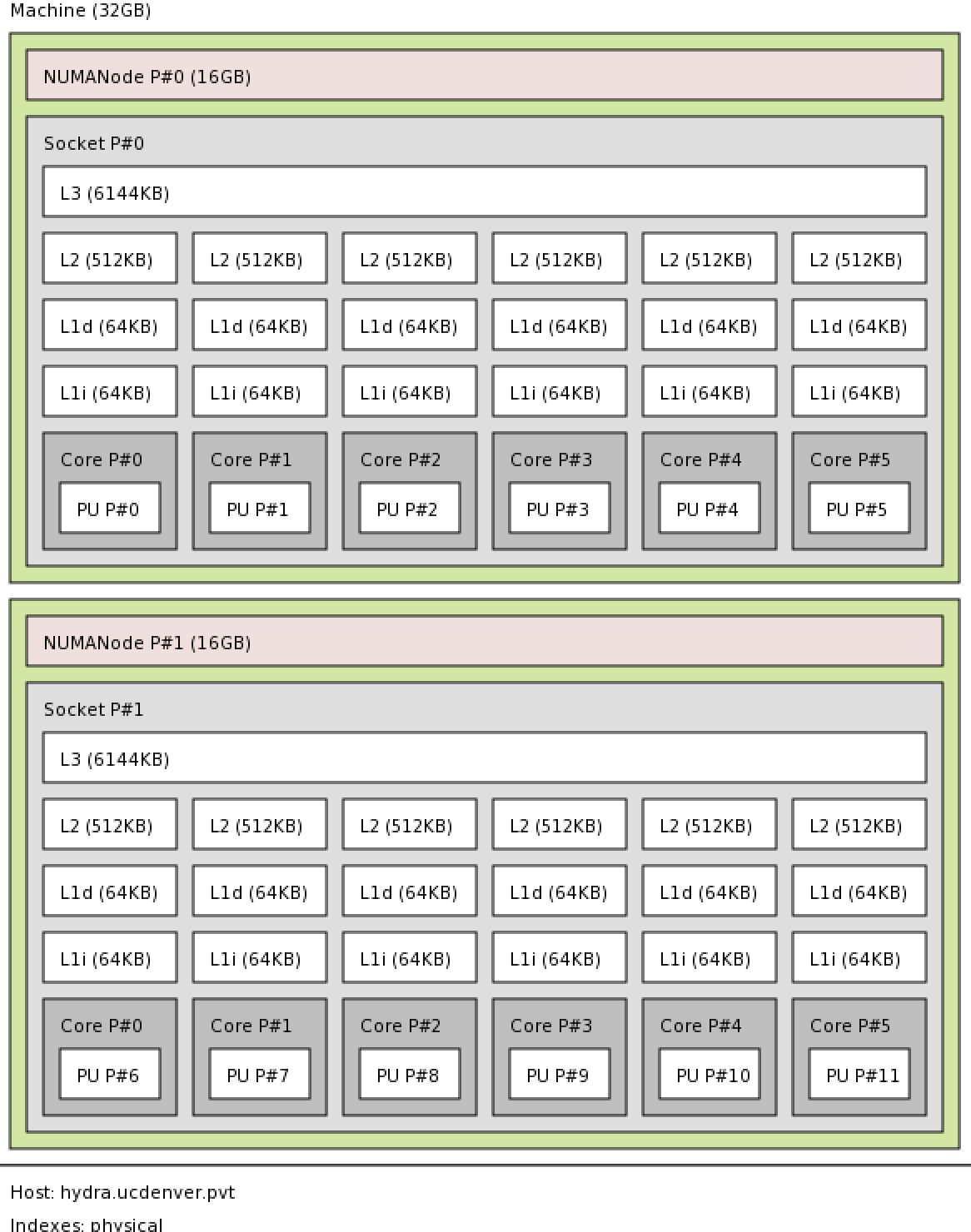

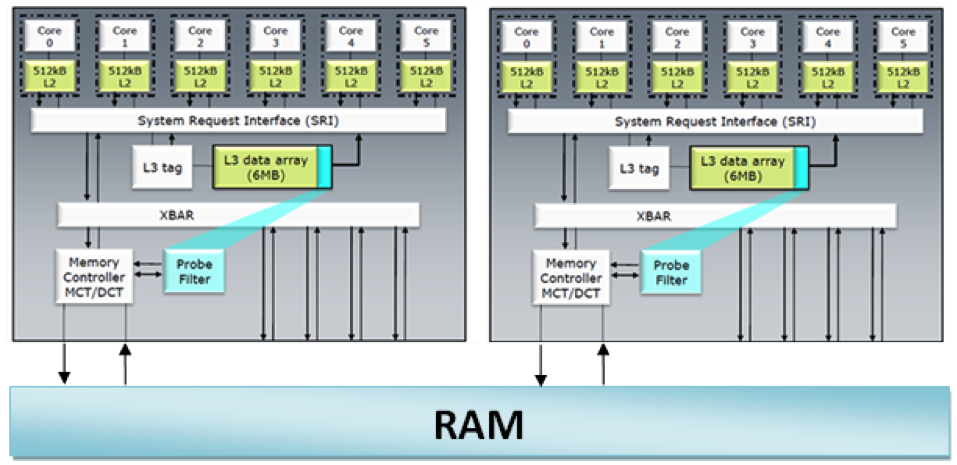

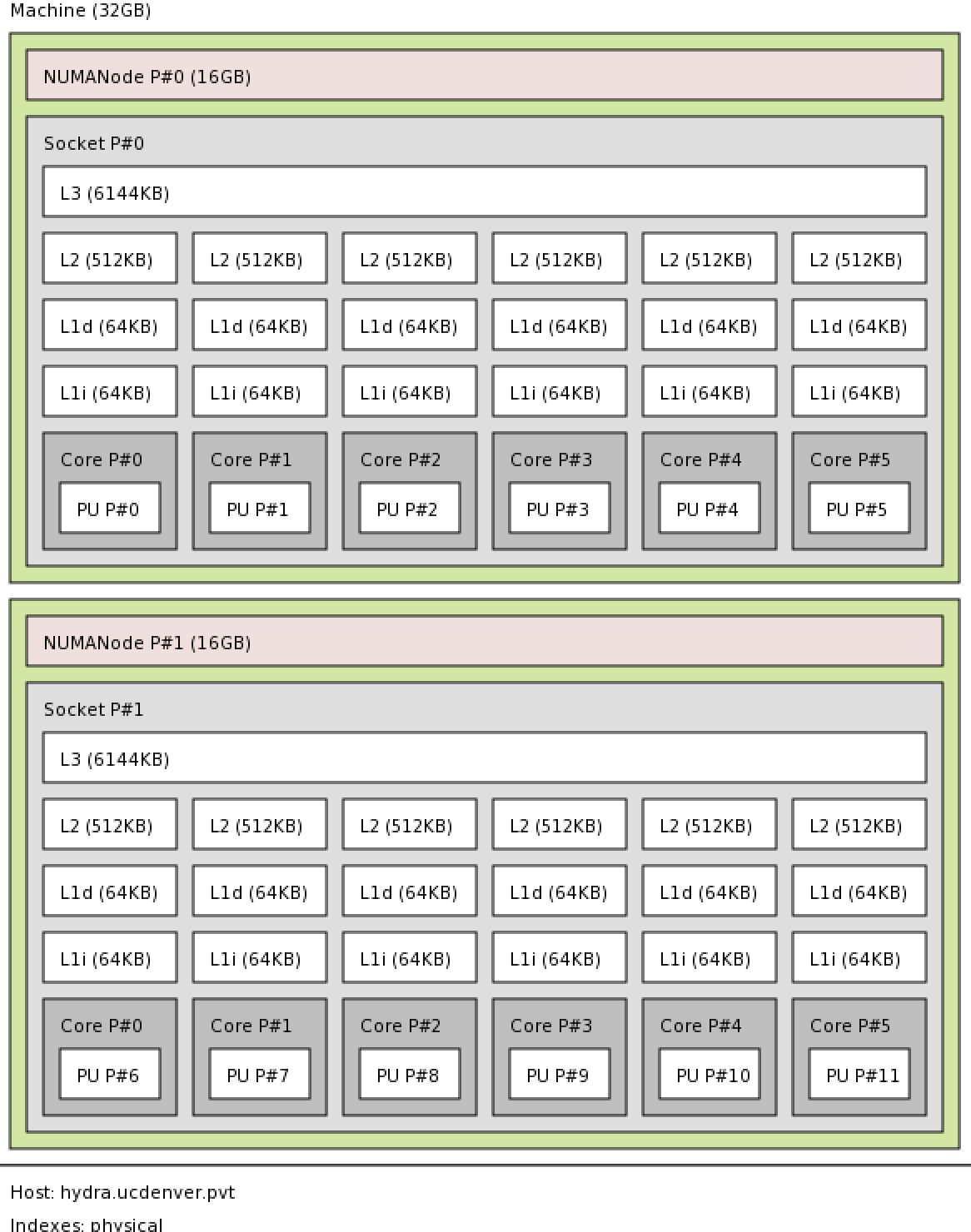

| Property | Value |
| --- | --- |
| Processors per Node | 2 x 6-core processors (Node 0 - Node 15) |
| Cores per Node | 12 cores (Node 0 - Node 15) |
| Processor Type | AMD Opteron 2427 (Node 0 - Node 15) |
| Processor Speed | 2.2 GHz |
| L1 Instruction Cache per Processor | 6 x 64 KB |
| L1 Data Cache per Processor | 6 x 64 KB |
| L2 Cache per Processor | 6 x 512 KB |
| L3 Cache per Processor | 6 MB |
| 64-bit Support | Yes |
| RAM on Master Node | 32 GB |
| Disk Space on Master Node | 238 GB (RAID1), 2,861 GB (RAID5) |
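The per-processor cache entries and the RAM figures can be combined into a few derived numbers. The Python sketch below is a rough illustration with hypothetical names. It assumes that the 544 GB total RAM from the cluster summary includes the master node's 32 GB and that the remainder is split evenly across the 16 compute nodes, neither of which the tables state explicitly.

```python
# Figures copied from the cluster summary and node tables.
TOTAL_RAM_GB = 544
MASTER_RAM_GB = 32
COMPUTE_NODES = 16
CORES_PER_PROCESSOR = 6
L1I_KB_PER_CORE = 64
L1D_KB_PER_CORE = 64
L2_KB_PER_CORE = 512
L3_MB_PER_PROCESSOR = 6


def ram_per_compute_node_gb():
    """RAM per compute node, assuming an even split of the non-master RAM."""
    return (TOTAL_RAM_GB - MASTER_RAM_GB) / COMPUTE_NODES


def cache_per_processor_kb():
    """Total cache per 6-core processor at each level, in KB."""
    return {
        "l1_instruction": CORES_PER_PROCESSOR * L1I_KB_PER_CORE,
        "l1_data": CORES_PER_PROCESSOR * L1D_KB_PER_CORE,
        "l2": CORES_PER_PROCESSOR * L2_KB_PER_CORE,
        "l3": L3_MB_PER_PROCESSOR * 1024,  # L3 is shared by the processor
    }


if __name__ == "__main__":
    print("RAM per compute node (GB):", ram_per_compute_node_gb())
    print("Cache per processor (KB):", cache_per_processor_kb())
```

If the even-split assumption holds, each compute node would carry the same 32 GB as the master node.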

| Property | Value |
| --- | --- |
| CUDA Driver Version / Runtime Version | 6.5 / 6.5 |
| CUDA Capability Major/Minor version number | 2.0 |
| Total amount of global memory | 2687 MBytes (2817982464 bytes) |
| (14) Multiprocessors, (32) CUDA Cores/MP | 448 CUDA Cores |
| GPU Clock rate | 1147 MHz (1.15 GHz) |
| Memory Clock rate | 1546 MHz |
| Memory Bus Width | 384-bit |
| L2 Cache Size | 786432 bytes |
| Maximum Texture Dimension Size (x,y,z) | 1D=(65536), 2D=(65536, 65535), 3D=(2048, 2048, 2048) |
| Total amount of constant memory | 65536 bytes |
| Total amount of shared memory per block | 49152 bytes |
| Total number of registers available per block | 32768 |
| Warp size | 32 |
| Maximum number of threads per multiprocessor | 1536 |
| Maximum number of threads per block | 1024 |
| Max dimension size of a thread block (x,y,z) | (1024, 1024, 64) |
| Max dimension size of a grid (x,y,z) | (65535, 65535, 65535) |
| Integrated GPU sharing Host Memory | No |
| Compute Mode | multiple host threads can use ::cudaSetDevice() with device simultaneously |
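Several GPU entries are related by simple arithmetic, and the Python sketch below works through them. The core count and the byte-to-MiB conversions come straight from the listed values. The two peak figures are theoretical estimates that rest on assumptions not stated in the table: one fused multiply-add (2 floating-point operations) per CUDA core per clock, and double-data-rate memory (2 transfers per memory clock). All names are illustrative.

```python
# Per-GPU figures copied from the GPU properties table.
MULTIPROCESSORS = 14
CUDA_CORES_PER_MP = 32
GPU_CLOCK_MHZ = 1147
MEMORY_CLOCK_MHZ = 1546
MEMORY_BUS_WIDTH_BITS = 384
GLOBAL_MEMORY_BYTES = 2817982464
L2_CACHE_BYTES = 786432


def cuda_cores():
    """CUDA cores per GPU: multiprocessors times cores per multiprocessor."""
    return MULTIPROCESSORS * CUDA_CORES_PER_MP


def global_memory_mib():
    """Global memory in whole MiB (2**20 bytes), rounded down."""
    return GLOBAL_MEMORY_BYTES // 2**20


def l2_cache_kib():
    """L2 cache size in KiB."""
    return L2_CACHE_BYTES // 1024


def peak_single_precision_gflops():
    """Theoretical peak, assuming 2 FLOPs (one FMA) per core per clock."""
    return cuda_cores() * GPU_CLOCK_MHZ * 2 / 1000


def peak_memory_bandwidth_gb_s():
    """Theoretical peak, assuming 2 transfers per memory clock (DDR)."""
    bytes_per_transfer = MEMORY_BUS_WIDTH_BITS // 8
    return MEMORY_CLOCK_MHZ * 1e6 * 2 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    print("CUDA cores:", cuda_cores())
    print("Global memory (MiB):", global_memory_mib())
    print("L2 cache (KiB):", l2_cache_kib())
    print("Peak SP GFLOPS (estimate):", peak_single_precision_gflops())
    print("Peak bandwidth GB/s (estimate):", peak_memory_bandwidth_gb_s())
```

The first three results match the table (448 cores, 2687 MBytes, and a 768 KiB L2 cache). The peak values are upper bounds under the stated assumptions, not measured performance.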